How Do Midjourney, Stable Diffusion, and DALL-E 2 Compare?

Venture into the captivating intersection of technology and artistry with our in-depth comparison of the transformative AI art platforms—Midjourney, Stable Diffusion, and DALL-E 2.

As we navigate this innovative landscape, we evaluate each platform’s unique contributions to art and technology. We delve into how AI, transcending its traditional role in data analysis, is emerging as a novel artist, converting simple text prompts into striking visual creations.

What is Midjourney?

Led by serial entrepreneur David Holz, Midjourney is a groundbreaking AI art platform that serves as a dynamic intersection of simplicity, style, and versatility. Far beyond an algorithmic art producer, Midjourney employs a sophisticated AI engine to foster creativity, exploration, and aesthetic discovery.

Holz, whose portfolio includes establishing the technology company Leap Motion and a notable academic background in physics and mathematics, founded Midjourney as a flexible creative platform. Stepping away from the venture-backed corporate environment, he envisioned a small, dedicated team keen to work on innovative projects without the pressure of financial motives or public company demands.

At its core, Midjourney redefines the paradigm of AI art creation. It transcends the conventional limits, harnessing the AI engine to generate an array of colors, textures, and shapes that transform text prompts into compelling visual narratives. Each prompt becomes a doorway to an infinite spectrum of artistic possibilities.

User-Centric Design: The Discord Bot

Amid this panorama of creativity, the Discord bot stands as a testament to Midjourney’s commitment to user-centricity. Designed as a personalized assistant, the bot is outfitted with a host of commands and parameters that empower users to fine-tune their AI art creations down to the most delicate details.

Midjourney’s unique user experience immerses you as an active participant in the artistic process. You don’t merely watch the masterpiece unfold but direct the aesthetics of the final product.

Midjourney is an interactive, AI-powered art studio that extends the boundaries of creativity. As Holz describes it, this technology serves as an “engine for the imagination”, designed to expand the imaginative powers of humanity. Its unique combination of simplicity, versatility, and user-centric design marks it as a vanguard of modern artistry.

Can I use Midjourney for free?

Midjourney runs through Discord, which is available on desktop, iOS, and Android, and operates on a paid subscription model with occasional free trials. When a free trial is offered, users who log in with their Discord account and register on the Midjourney site can generate about 25 images at no cost.

What is Stable Diffusion?

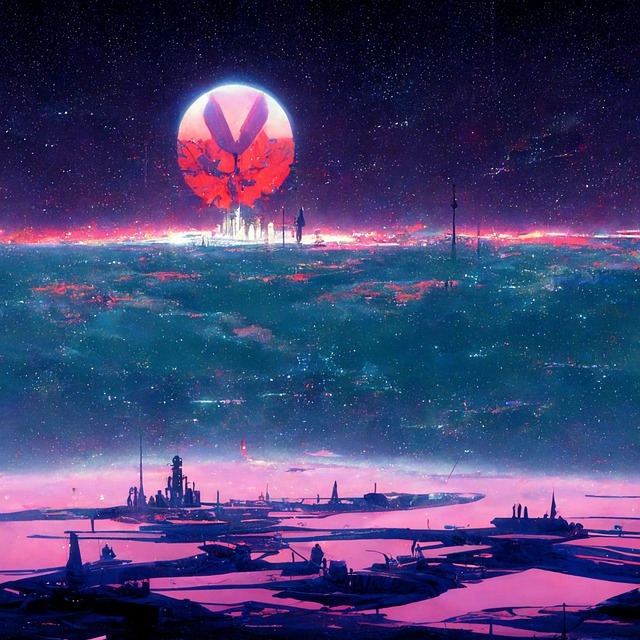

Marking its presence in the AI art domain with technical finesse, Stable Diffusion represents a cutting-edge system that interweaves AI-driven technology, rich training data, and unbridled creativity to fabricate awe-inspiring visual narratives.

The brainchild of researchers from the CompVis Group at Ludwig Maximilian University of Munich and Runway, Stable Diffusion is a deep learning, text-to-image model launched in 2022. Notably, this ground-breaking project was backed by a compute donation from Stability AI, with training data procured from various non-profit organizations. It serves as a testament to the cross-disciplinary collaboration driving the frontier of AI technology.

The technology behind Stable Diffusion

At its core, Stable Diffusion uses a latent diffusion model, a type of deep generative artificial neural network. Its architecture relies on iterative denoising, guided by the CLIP text encoder pretrained on diverse concepts. The denoising process can be viewed as a sequence of denoising autoencoders, and it produces an image that visually interprets the prompt.

Stable Diffusion’s structure comprises three key components: the variational autoencoder (VAE), U-Net, and an optional text encoder. The VAE encoder compresses the image from pixel space into a more compact latent space, capturing the image’s semantic essence.
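To make the compression concrete, the short sketch below works through the shape arithmetic. It assumes the configuration of the original Stable Diffusion v1 models, where the VAE shrinks each side of the image by a factor of 8 and outputs 4 latent channels; the function names are illustrative, not part of any library.

```python
# Shape arithmetic for Stable Diffusion's VAE.
# ASSUMPTION: v1 configuration, i.e. 8x spatial downsampling and 4 latent channels.
VAE_SCALE_FACTOR = 8
LATENT_CHANNELS = 4


def latent_shape(height: int, width: int) -> tuple[int, int, int]:
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % VAE_SCALE_FACTOR or width % VAE_SCALE_FACTOR:
        raise ValueError("height and width must be multiples of 8")
    return (LATENT_CHANNELS, height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR)


def compression_ratio(height: int, width: int) -> float:
    """How many RGB pixel values correspond to each latent value."""
    c, h, w = latent_shape(height, width)
    return (3 * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

In other words, the U-Net works on roughly 48 times fewer numbers than the raw image contains, which is a large part of why latent diffusion is practical on consumer hardware.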

Gaussian noise is iteratively added to this compressed latent representation during forward diffusion, and the U-Net block, working backwards, denoises it step by step to obtain a clean latent representation. Finally, the VAE decoder generates the final image by converting this representation back to pixel space.
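Forward diffusion has a convenient closed form: after t steps, a latent x₀ becomes √ᾱₜ·x₀ + √(1 − ᾱₜ)·ε, where ε is standard Gaussian noise and ᾱₜ is the running product of (1 − βₛ) over the noise schedule. The toy sketch below applies that formula to a handful of numbers. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is an illustrative assumption borrowed from the original DDPM setup, not Stable Diffusion's exact configuration.

```python
import math
import random

# Toy forward diffusion using the closed form
#   x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps
# ASSUMPTION: linear beta schedule from 1e-4 to 0.02 over 1000 steps (illustrative).
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# abar_t is the product of (1 - beta_s) for s <= t: how much of the
# original signal survives after t + 1 noising steps.
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def noisy_latent(x0: list[float], t: int, rng: random.Random) -> list[float]:
    """Jump directly to step t (0-indexed) of forward diffusion."""
    abar = alpha_bars[t]
    return [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
x0 = [0.5, -1.0, 0.25, 0.8]
print(noisy_latent(x0, 10, rng))     # early step: still close to x0
print(noisy_latent(x0, T - 1, rng))  # last step: almost pure Gaussian noise
```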

Learning prompt engineering can help you take your Stable Diffusion results to a more expert level. During generation, the model refines the noise step by step, gently guiding it through a journey of transformation: each denoising step brings the latent closer to an image that matches your prompt.

The end result is an artwork that reflects your initial description (and, when you start from an existing picture, that picture as well). It’s like watching a digital sculptor chisel away at a block of noise until it reveals a masterpiece hidden within.
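The step-by-step refinement can be sketched as a deterministic, DDIM-style sampling loop. This is a toy under stated assumptions: a linear noise schedule, 50 sampling steps, and a hypothetical `oracle_predict_noise` function standing in for the trained U-Net. Because the oracle already knows the target, it recovers it exactly; a real U-Net only approximates the noise, guided by the text embedding.

```python
import math
import random

# Toy reverse diffusion in the style of deterministic DDIM sampling.
# ASSUMPTIONS: linear beta schedule (1e-4 to 0.02, 1000 steps) and a
# hypothetical oracle in place of the trained, text-conditioned U-Net.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bars, running = [], 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def oracle_predict_noise(x_t, t, target):
    """Hypothetical U-Net stand-in: returns the exact noise separating x_t from target."""
    abar = alpha_bars[t]
    return [(x - math.sqrt(abar) * y) / math.sqrt(1.0 - abar) for x, y in zip(x_t, target)]


def ddim_sample(target, steps=50, seed=0):
    """Start from pure noise and denoise it toward `target` in `steps` steps."""
    rng = random.Random(seed)
    stride = T // steps
    timesteps = list(range(T - 1, -1, -stride))  # 999, 979, ..., 19 for steps=50
    x = [rng.gauss(0.0, 1.0) for _ in target]
    distances = []
    for i, t in enumerate(timesteps):
        eps = oracle_predict_noise(x, t, target)
        abar = alpha_bars[t]
        # 1) Estimate the clean latent from the current noisy one.
        x0_hat = [(xi - math.sqrt(1.0 - abar) * e) / math.sqrt(abar) for xi, e in zip(x, eps)]
        # 2) Re-noise that estimate to the next, lower noise level (none after the last step).
        abar_next = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        x = [math.sqrt(abar_next) * c + math.sqrt(1.0 - abar_next) * e for c, e in zip(x0_hat, eps)]
        distances.append(math.dist(x, target))
    return x, distances


target = [0.5, -1.0, 0.25, 0.8]
final, distances = ddim_sample(target)
print(f"distance after first step: {distances[0]:.3f}")
print(f"distance after last step:  {distances[-1]:.2e}")
```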

Resolution Support

Stable Diffusion doesn’t limit itself to a 512×512 resolution, a constraint that many might presume because its initial release was trained at that size. On the contrary, this tool is all about expanding horizons and exceeding expectations.

With Stable Diffusion 2.0, released in November 2022, Stability AI added a model trained at 768×768, so the tool now caters to a broader spectrum of artistic needs. Higher-resolution output unlocks more intricate, detailed, and complex art pieces. It’s like getting a larger canvas and a wider palette of colors to paint with. This expanded capability is another testament to Stable Diffusion’s commitment to fostering creativity without limits.
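Because the VAE downsamples by a factor of 8 (assumed here, as in the v1 and v2 models), requested widths and heights generally need to be multiples of 8, and every increase in resolution adds latent positions for the U-Net to process at each step. The helper below is a hypothetical sketch that snaps dimensions to a valid size and compares the latent area with that of a 512×512 image. Treat the ratio as a rough proxy for cost, since attention layers scale worse than linearly.

```python
# ASSUMPTION: 8x spatial downsampling, as in the Stable Diffusion v1/v2 VAEs.
VAE_SCALE_FACTOR = 8


def snap_to_multiple(value: int, multiple: int = VAE_SCALE_FACTOR) -> int:
    """Round a requested dimension down to the nearest multiple of `multiple`."""
    if value < multiple:
        raise ValueError(f"dimension must be at least {multiple} pixels")
    return value - value % multiple


def relative_latent_area(width: int, height: int, base: int = 512) -> float:
    """Latent positions per denoising step, relative to a base x base image."""
    w, h = snap_to_multiple(width), snap_to_multiple(height)
    latent_area = (w // VAE_SCALE_FACTOR) * (h // VAE_SCALE_FACTOR)
    base_area = (base // VAE_SCALE_FACTOR) ** 2
    return latent_area / base_area


for width, height in [(512, 512), (768, 768), (1000, 700)]:
    w, h = snap_to_multiple(width), snap_to_multiple(height)
    print(f"{width}x{height} -> {w}x{h}, {relative_latent_area(width, height):.2f}x the latent area")
```

A 768×768 image, for example, has 2.25 times as many latent positions as a 512×512 one.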

In a nutshell, Stable Diffusion is more than an AI art tool. It’s a paradigm of technical innovation, a champion of openness and inclusivity, and a platform that constantly evolves to cater to the dynamic needs of artists.

Stable Diffusion is one model that shows how far AI is truly pushing, much like the facial detection software and AI we have seen over the last few years.

What is DALL-E 2?

Pioneered by OpenAI, DALL-E and its successor DALL-E 2 are potent deep learning models crafted to generate digital images from natural language descriptions. Unveiled in January 2021, DALL-E employed a modified version of GPT-3 to create images. In April 2022, DALL-E 2, designed to create more authentic and high-resolution images with a remarkable capability to amalgamate concepts, attributes, and styles, was introduced.

DALL-E 2’s advanced features: inpainting and outpainting

DALL-E 2 has advanced editing features you can learn to use. Inpainting lets you erase part of an existing image and have the AI fill in the gap with new content that matches the rest of the picture. Outpainting extends an image beyond its original borders, generating new content that continues the scene. Combining the two techniques can be an effective way to rework and enlarge your images.
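Both editing modes come down to a mask that marks which pixels the model should regenerate. In OpenAI's image edit endpoint for DALL-E 2, fully transparent pixels (alpha 0) mark the area to fill. The sketch below builds such an alpha channel as a plain grid of numbers, once for an outpainting canvas with the original centred and once for a rectangular inpainting hole. The function names are hypothetical, and a real workflow would save the result as a PNG with an imaging library.

```python
def outpaint_alpha_mask(orig_w: int, orig_h: int, canvas_size: int) -> list[list[int]]:
    """Alpha channel for a square canvas with the original image centred.

    255 = keep the original pixel, 0 = transparent area for the model to generate.
    """
    if orig_w > canvas_size or orig_h > canvas_size:
        raise ValueError("original image must fit inside the canvas")
    left = (canvas_size - orig_w) // 2
    top = (canvas_size - orig_h) // 2
    return [
        [255 if left <= x < left + orig_w and top <= y < top + orig_h else 0
         for x in range(canvas_size)]
        for y in range(canvas_size)
    ]


def inpaint_alpha_mask(size: int, box: tuple[int, int, int, int]) -> list[list[int]]:
    """Alpha channel that erases the rectangle box = (x0, y0, x1, y1) for inpainting."""
    x0, y0, x1, y1 = box
    return [[0 if x0 <= x < x1 and y0 <= y < y1 else 255 for x in range(size)]
            for y in range(size)]


# Visualise a tiny outpainting mask: '#' = original pixels, '.' = area to generate.
for row in outpaint_alpha_mask(4, 4, 8):
    print("".join("#" if alpha else "." for alpha in row))
```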

One of the significant challenges that potential users might encounter is that OpenAI has not released DALL-E 2’s source code or model weights. This makes it difficult for those who wish to dig deeper into the underpinnings of the technology or adapt it to more specific use cases.

The Public Release of DALL-E 2

But let’s not dwell on the hurdles. After all, the world of AI is one of relentless progress and constant evolution. In a landmark move in September 2022, DALL-E 2 was made accessible to the public, enabling a wider spectrum of users to harness its capabilities.

The impact was immediate and profound; DALL-E 2 swiftly rose to prominence, establishing itself as a premier player in the field of AI image generation.

DALL-E 2 has shown a remarkable evolution since its inception. Starting with a closed beta phase, it soon opened up to the public, inviting a million waitlisted individuals to generate a certain number of images for free every month.

It further expanded its reach when it was released as an API in November 2022, enabling developers to incorporate DALL-E 2 into their applications and platforms. It has found its application in Microsoft’s Designer app, Bing’s Image Creator tool, and applications like CALA and Mixtiles, demonstrating its extensive utility.
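For developers, a request to the API is a small JSON payload. The sketch below assumes OpenAI's REST endpoint `https://api.openai.com/v1/images/generations` with the `model`, `prompt`, `n`, and `size` fields, and the 256×256, 512×512, and 1024×1024 sizes documented for `dall-e-2` at the time of writing; check the current API reference before relying on it. It uses only the Python standard library, and the actual network call is left commented out because it needs an `OPENAI_API_KEY`.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/images/generations"
DALLE2_SIZES = {"256x256", "512x512", "1024x1024"}  # sizes documented for dall-e-2


def build_generation_request(prompt: str, n: int = 4, size: str = "512x512") -> dict:
    """Build the JSON body for a DALL-E 2 image-generation request."""
    if size not in DALLE2_SIZES:
        raise ValueError(f"unsupported size {size!r}")
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")
    return {"model": "dall-e-2", "prompt": prompt, "n": n, "size": size}


def send(body: dict) -> dict:
    """POST the request; needs network access and an OPENAI_API_KEY environment variable."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


body = build_generation_request("a dreamy sunset over a tranquil ocean")
print(json.dumps(body))
# response = send(body)  # response["data"] holds one entry per generated image
```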

How much does DALL-E 2 cost?

DALL-E 2’s price is reasonable when compared with other credit-based platforms. Each text prompt produces four pictures and costs 1 credit. Credits are sold in packs of 115 for $15, which works out to about $0.13 per prompt and roughly $0.033 per image. An inpainting edit also costs 1 credit and returns three options per round.
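The arithmetic behind those per-prompt and per-image figures is easy to check. The sketch assumes OpenAI's 2022 pricing for the DALL-E 2 web interface: $15 for 115 credits, with one credit buying either a prompt (four images) or an edit or variation round (three images). Prices may have changed since.

```python
# ASSUMPTION: 2022 DALL-E 2 web pricing, $15 per 115 credits;
# 1 credit = one prompt (4 images) or one edit/variation round (3 images).
PACK_PRICE_USD = 15.00
CREDITS_PER_PACK = 115
IMAGES_PER_PROMPT = 4
IMAGES_PER_EDIT = 3

cost_per_credit = PACK_PRICE_USD / CREDITS_PER_PACK
cost_per_prompt_image = cost_per_credit / IMAGES_PER_PROMPT
cost_per_edit_image = cost_per_credit / IMAGES_PER_EDIT

print(f"per prompt:       ${cost_per_credit:.3f}")        # $0.130
print(f"per prompt image: ${cost_per_prompt_image:.4f}")  # $0.0326
print(f"per edit image:   ${cost_per_edit_image:.4f}")    # $0.0435
```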

Similarities

In the realm of AI art generation, it is the shared capabilities that form the common ground on which Midjourney, Stable Diffusion, and DALL-E 2 stand. Let’s dissect each of these shared features to understand how they contribute to and improve the tools’ effectiveness.

Each AI tool has distinctive strengths. DALL-E 2 and Stable Diffusion’s outpainting feature is a significant advantage for artists and content creators looking for advanced visual storytelling capabilities. Midjourney’s free beta access and supportive community appeal to budget-conscious artists and beginners exploring the world of AI art generation.

On the other hand, Stable Diffusion’s open-source platform, although demanding a higher learning curve, provides a cost-effective solution for technically proficient users looking for customization options.

Text-to-Image Transformation

The groundbreaking innovation of AI-based art generation is the translation of text prompts into detailed visuals, the bedrock capability shared by Midjourney, Stable Diffusion, and DALL-E 2.

This ability revolutionizes storytelling by offering a creative amalgamation of language and visual arts, serving as a potent tool for artists, writers, and marketing professionals alike.

For example, if a user types “a dreamy sunset over a tranquil ocean,” all three tools would encode the text into a numerical representation that captures its key elements (dreamy, sunset, tranquil, ocean) and use patterns learned during training to generate an image that visually represents the description.

This could result in an array of images, each with its own variations and its own interpretation of the prompt.
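Under the hood, in Stable Diffusion the text steers each denoising step through classifier-free guidance: the U-Net predicts the noise twice, once with the prompt's embedding and once without, and the sampler pushes the result further in the direction the prompt suggests. Midjourney's internals are not public, so this sketch should not be read as describing all three tools. The noise values are made up for illustration, and the default scale of 7.5 mirrors the default `guidance_scale` of the `diffusers` library's Stable Diffusion pipeline.

```python
def classifier_free_guidance(eps_uncond, eps_text, guidance_scale=7.5):
    """Combine unconditional and text-conditioned noise predictions.

    guided = uncond + scale * (text - uncond). A scale of 1 reproduces the
    text-conditioned prediction; larger values follow the prompt more strongly.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_text)]


# Made-up noise predictions for three latent values, for illustration only.
eps_uncond = [0.10, -0.20, 0.05]
eps_text = [0.30, -0.10, 0.05]
print(classifier_free_guidance(eps_uncond, eps_text, guidance_scale=1.0))
print(classifier_free_guidance(eps_uncond, eps_text))
```

Where the two predictions agree (the third value), guidance changes nothing; where they differ, the difference is amplified.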

The prowess of these AI tools comes from their training on large, wide-ranging datasets.

Because of the breadth and depth of that training data, drawn from an expansive variety of sources, they can interpret context, cultural references, and nuances within a prompt.

This ability to understand complex prompts and generate accurate, highly detailed, and contextually relevant images is what sets them apart.

Advanced Techniques

These AI platforms offer user control over the final image’s aesthetics, facilitating more nuanced and personalized results. Midjourney provides an array of commands for fine-tuning, DALL-E 2 generates multiple image versions from a single prompt, and Stable Diffusion, with its open-source nature, allows users to modify the model’s code for maximal control.
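Midjourney's fine-tuning happens through parameters appended to the prompt in the Discord bot's `/imagine` command, such as `--ar` (aspect ratio), `--stylize`, `--chaos`, `--seed`, and `--no` (elements to exclude). The helper below is a hypothetical sketch that assembles the text for the prompt field; the accepted ranges reflect Midjourney's documentation at the time of writing and may differ between model versions.

```python
def midjourney_prompt(text, aspect_ratio=None, stylize=None, chaos=None, seed=None, exclude=()):
    """Assemble the prompt-field text for Midjourney's /imagine command (hypothetical helper)."""
    parts = [text.strip()]
    if aspect_ratio is not None:
        width, height = aspect_ratio
        parts.append(f"--ar {width}:{height}")
    if stylize is not None:
        if not 0 <= stylize <= 1000:
            raise ValueError("--stylize expects a value from 0 to 1000")
        parts.append(f"--stylize {stylize}")
    if chaos is not None:
        if not 0 <= chaos <= 100:
            raise ValueError("--chaos expects a value from 0 to 100")
        parts.append(f"--chaos {chaos}")
    if seed is not None:
        if not 0 <= seed <= 4_294_967_295:
            raise ValueError("--seed expects a value from 0 to 4294967295")
        parts.append(f"--seed {seed}")
    if exclude:
        # --no asks the model to leave these elements out of the image.
        parts.append("--no " + ", ".join(exclude))
    return " ".join(parts)


print(midjourney_prompt("a dreamy sunset over a tranquil ocean",
                        aspect_ratio=(16, 9), stylize=250, exclude=["boats"]))
# a dreamy sunset over a tranquil ocean --ar 16:9 --stylize 250 --no boats
```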

DALL-E 2, while less customizable than Midjourney, can generate multiple versions of an image from the same text prompt in just seconds, allowing users to choose their preferred output.

Stable Diffusion, due to its open-source nature, gives tech-savvy users the highest degree of control: they can tweak the model’s code itself, which offers far greater control than the other two tools.
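One of the simplest controls that open code exposes is the random seed for the initial latent noise: with the same seed, prompt, and settings, Stable Diffusion reproduces the same image, while a new seed yields a new variation. The sketch shows the principle with Python's `random` module; real pipelines draw the noise with a framework generator (for example a `torch.Generator` passed to a `diffusers` pipeline), and the 4×64×64 latent shape assumes a 512×512 image with a v1 model.

```python
import random

LATENT_SHAPE = (4, 64, 64)  # ASSUMPTION: 512x512 image with a Stable Diffusion v1 VAE


def initial_latent(seed: int) -> list[float]:
    """Draw the flattened starting noise for one image from a seeded generator."""
    rng = random.Random(seed)
    c, h, w = LATENT_SHAPE
    return [rng.gauss(0.0, 1.0) for _ in range(c * h * w)]


a = initial_latent(1234)
b = initial_latent(1234)
c = initial_latent(1235)
print(a == b)  # True: same seed, identical starting noise
print(a == c)  # False: a different seed gives different noise
```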

Variation of Control

While the mode and degree of control differ across these platforms, the essence remains the same: these tools are designed to work with the user, not just create something for the user. They are meant to be creative partners rather than standalone solutions.

They leverage advanced techniques of text-to-image transformation, extensive training on diverse datasets, and user control on final output to redefine the boundaries of digital art, making them all unique yet complementary tools in the AI art generation landscape.

Differences

Even with the shared capabilities, each of these AI image generators has its unique characteristics that distinguish them from one another. Let’s dive deeper into these distinctive features to understand their strengths and why they might be better suited for different users.

Outpainting

One of the standout features of DALL-E 2 and Stable Diffusion is outpainting. This capability extends an image beyond its original borders, generating new content that continues the existing scene.

For instance, if you’ve selected a portion of a forest landscape, DALL-E 2 can generate what the rest of the forest might look like. This advanced functionality sets them apart and makes both a favored choice for artists, content creators, and designers looking for advanced features to enrich their visual storytelling.


Free Access and Community Support

Midjourney provides free beta access to a limited number of users. This offer, coupled with the support and feedback from a vibrant community of beta users, makes it an enticing choice for budget-conscious artists, hobbyists, or those new to the realm of AI art generation.

Open Source Platform

Being an open-source platform, Stable Diffusion is a boon for technically savvy users looking for a cost-effective solution. It provides the freedom to tweak the model’s code, enabling users to customize the tool to their specific needs.

While it might demand a steeper learning curve than other tools, it is a powerful resource for those willing to invest time, focus and effort into mastering its features.

Access and Availability

While OpenAI has kept the source code for both models under wraps, DALL-E 2 entered a beta phase in July 2022, rolling out invitations to a million waitlisted individuals. During the beta, users could generate a certain quota of images for free every month, with an option to purchase more. Opening DALL-E 2 to the public in September 2022 ended its exclusive access for select users.

November 2022 witnessed the release of DALL-E 2 as an API, permitting developers to incorporate the model into their applications. Its implementation was subsequently seen in Microsoft’s Designer app and Image Creator tool incorporated in Bing and Microsoft Edge. CALA and Mixtiles were among the early adopters of the DALL-E 2 API, which operates on a cost per image basis.

Strengths and Weaknesses of Each

The distinctive features of DALL-E 2, Midjourney, and Stable Diffusion offer a variety of benefits to different users. Whether it’s the advanced functionality of DALL-E 2, the customizable stylized outputs of Midjourney, or the high-quality, open-source accessibility of Stable Diffusion, each tool has unique strengths that cater to varying needs, preferences, and technical capabilities of users in the expansive world of AI image generation.

Which is the best?

Navigating the burgeoning field of AI art generation can seem akin to charting a course through an intricate maze, teeming with myriad possibilities. Each of these platforms has its distinct expertise and addresses different requirements, thus carving out a unique position within this dynamic field.

As we peel back the layers and dive into a comparative analysis of these three powerful platforms, it’s essential to underscore that the ‘best’ isn’t a one-size-fits-all label.

The yardstick for what constitutes the ‘best’ depends largely on specific user requirements, preferences, and the desired results and level of user engagement. So, let’s sift through the details, unravel their unique facets, and help you determine which of these AI art generators could potentially be your perfect fit.

1. Quality and Consistency of Output

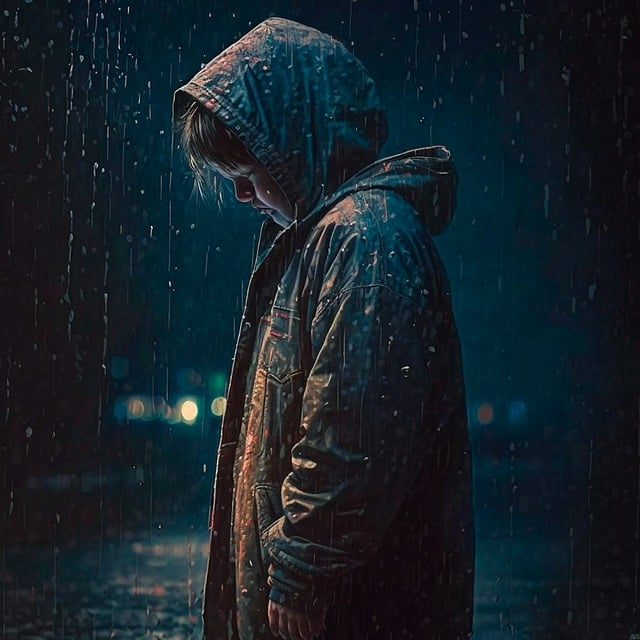

First and foremost, it’s essential to consider the quality and consistency of the images that these AI tools generate. DALL-E 2, being the creation of OpenAI, generally produces high-quality, unique, realistic and intricately detailed images that adhere closely to the input text prompts.

DALL-E and DALL-E 2 are renowned for their ability to create images in a variety of styles, including photorealistic imagery, paintings, and emoji.

They possess the ability to manipulate objects within images and accurately place design elements in novel compositions without explicit instruction. In fact, DALL-E 2 goes a step further, employing a diffusion model conditioned on CLIP image embeddings.
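OpenAI's DALL-E 2 paper describes a two-stage design: a prior turns the CLIP text embedding into a CLIP image embedding, a diffusion decoder generates a 64×64 image from that embedding, and two diffusion upsamplers raise the resolution to 256×256 and then 1024×1024. The skeleton below only traces that data flow. Every stage is a hypothetical placeholder that tracks shapes, and the embedding size is an illustrative assumption.

```python
from dataclasses import dataclass

# Data-flow skeleton of DALL-E 2's two-stage design.
# Every stage is a hypothetical placeholder that only tracks shapes; the real
# components are large trained networks that OpenAI has not released.
EMBED_DIM = 768  # ASSUMPTION: illustrative embedding size


@dataclass
class Image:
    width: int
    height: int


def clip_text_encoder(prompt: str) -> list[float]:
    return [0.0] * EMBED_DIM  # placeholder CLIP text embedding


def prior(text_embedding: list[float]) -> list[float]:
    # Maps a CLIP text embedding to a plausible CLIP *image* embedding.
    return list(text_embedding)


def decoder(image_embedding: list[float]) -> Image:
    # Diffusion decoder conditioned on the image embedding; produces a small image.
    return Image(64, 64)


def upsample(image: Image, size: int) -> Image:
    # Diffusion upsampler: raises the resolution while adding detail.
    return Image(size, size)


def generate(prompt: str) -> Image:
    image = decoder(prior(clip_text_encoder(prompt)))
    for size in (256, 1024):
        image = upsample(image, size)
    return image


print(generate("a dreamy sunset over a tranquil ocean"))  # Image(width=1024, height=1024)
```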

Stable Diffusion, on the other hand, is renowned for its technical prowess. It can create high-resolution images and supports extensive parameter tuning, which can be highly appealing for those seeking a more photorealistic finish. The images it produces might lack some of the whimsical creativity found in DALL-E 2’s output but make up for it in stability and fewer distortions.

Midjourney, although a newcomer, is rapidly gaining recognition for its artistic flair and stylized output styles.

It’s particularly adept at creating images that veer towards the abstract, adding a unique spin to the interpretation of text prompts. This may appeal more to those who prefer an artsy touch over strict realism.

2. Customizability and User Control

The degree of control that users can exert over the final image varies significantly across these tools. Midjourney stands out for its easy-to-use customization, allowing users to fine-tune their images using a range of commands and parameters.

This can be a game-changer for artists who prefer to have a greater say in the final output or who enjoy experimenting with various artistic styles and elements.

In contrast, DALL-E 2, while offering options to generate variations of an image, does not provide the same degree of customization as Midjourney. However, it compensates for this by producing consistently high-quality images that often hit the mark at the first attempt.

Stable Diffusion, due to its open-source nature, allows tech-savvy users to tweak and experiment with the code itself. This provides an unparalleled degree of control but requires a higher level of technical expertise.

3. Accessibility and Ease of Use

From the perspective of accessibility and ease of use, DALL-E 2 excels. Its simple web interface makes it a convenient choice for users regardless of their technical expertise, and its public API lets developers build it into their own products. Also, the quality of images it generates with minimal input and setup makes it an attractive option for those seeking ease of use and fast results.

Midjourney’s Discord bot-based interface may present a learning curve for some users, but its vibrant community support can greatly ease this process.

Stable Diffusion, being open-source, can be freely accessed by anyone. However, the requirement for a reasonable GPU and some coding knowledge may limit its user base to more technically inclined individuals.
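A quick back-of-the-envelope calculation shows why a capable GPU matters. The sketch assumes roughly 1 billion parameters for a Stable Diffusion v1 model in total (U-Net, text encoder, and VAE combined), which is an approximate figure, and counts only the memory needed to store the weights; activations and framework overhead come on top.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed just to hold the model weights, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


SD_V1_PARAMS = 1.0e9  # ASSUMPTION: rough total for U-Net + text encoder + VAE

for precision in ("fp32", "fp16"):
    print(precision, f"{weight_memory_gb(SD_V1_PARAMS, precision):.1f} GB")  # 4.0 GB, then 2.0 GB
```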

The Best of the Bunch?

While all these AI tools—DALL-E 2, Midjourney, and Stable Diffusion—bring a multitude of strengths to the table, each has unique offerings that cater to specific user needs and preferences.

DALL-E 2 shines with its advanced functionalities and extensive access, Midjourney appeals with its customizable stylized outputs and supportive community, and Stable Diffusion stands out with its open-source accessibility and high-quality outputs.

For those with technical expertise who wish to experiment with the tool’s internal mechanics and favor a photorealistic output, Stable Diffusion could be the ideal choice.

Eye of the Beholder

Ultimately, it’s not about finding the ‘best’ tool in absolute terms, but about finding the ‘best’ tool for you – one that meets your artistic vision, technical comfort, and creative needs.

For artists, these AI tools are akin to a treasure trove, enriching their creative process by providing a fresh perspective and offering new ways to bring their visions to life.

However, the potential of these AI image generators extends far beyond the art community. Businesses can leverage these tools to create customized branding, design stunning marketing materials, and even generate unique product designs, all from simple text prompts.

Are you a Startup or Business?

Startups, in particular, can use tools like these to generate more graphics and art for their social media pages. With limited resources, startups can tap into the power of AI to create high-quality visuals that would otherwise require a dedicated design team.

For example, Midjourney’s community-driven platform can serve as a valuable learning and networking hub for entrepreneurs looking to grasp the fundamentals of AI-generated art and design.

On the other hand, Stable Diffusion’s open-source nature makes it an ideal choice for tech startups or businesses with an in-house technical team.

Having direct access to the model’s code, these businesses can tweak and modify the tool to suit their specific needs, offering a level of customization that’s hard to match.

Empowering Individuals

But it’s not just businesses and artists who can benefit. Whether you’re a hobbyist, a creative enthusiast, or a curious mind, these AI tools can empower you to express your creativity in novel ways.

You don’t have to be an artist to create beautiful art – with AI, anyone can become a creator. These tools democratize the process of art creation, making it accessible to everyone, regardless of their artistic skills or technical know-how.

As such, they not only transform the way we create art, but they also reshape our understanding of what it means to be a creator.

Complementing Each Other

While each tool carries its unique set of features, they can also complement each other when used in conjunction.

For instance, an artist might use Stable Diffusion for its high-quality images, then use Midjourney’s extensive customization options to add final touches and adjustments.

Using these AI tools together, as synergistic partners, can open up new possibilities, enabling artists and creators to exploit the best of each platform.

It’s about finding the right balance and combination that aligns with your artistic vision or business objectives.

Innovations on the Horizon: Future Implications

As we’ve seen, AI is making a significant impact on the field of digital art, offering a revolutionary approach to image generation. But this is just the beginning. The rapid pace of advancements in AI and machine learning suggests that more innovative tools and capabilities are on the horizon.

As AI becomes more sophisticated, we can anticipate a future where AI doesn’t just assist in the creative process but becomes an integral part of it.

Future of AI art

In the not-so-distant future, we might witness AI tools that generate not just single images but entire art collections based on a theme or a style, or tools that create dynamic art that evolves in real time based on user interactions or environmental data.

Final thoughts

In essence, the strides made by AI models like Midjourney, Stable Diffusion, and DALL-E each embody the infinite possibilities that AI is ushering into the realm of art and design.

The advancement of AI in the realms of content and image generation is a testament to the convergence of technology and creativity.

As we move forward, these models are not just tools, but partners in the artistic process, enabling new forms of expression and innovation. The journey may have just begun, but the destination promises a future where AI is an integral part of our creative landscape.