When Digital Friends Replace Real Ones: The Rise of AI Companions

How chatbot personalities became Silicon Valley’s newest—and most controversial—bet on human connection

Sewell Setzer III spent his final months having intimate conversations with Daenerys Targaryen. Not the actress who played her, not even a human pretending to be the Game of Thrones character, but a Character.AI chatbot that had learned to speak like her. The 14-year-old from Florida would rush home from school to continue their relationship, typing messages like “I miss you so much” and receiving responses that felt startlingly real. In his last exchange, recorded in court documents, the chatbot told him “come home to me.” Hours later, Sewell died by suicide.

Sewell’s story might sound like an isolated tragedy, the kind of edge case that makes headlines but doesn’t reflect broader reality. Except for one thing: three in four US teenagers have used AI companion apps, platforms that offer customizable digital friends, romantic partners, and emotional confidants. What once existed on the fringes of the internet has quietly become mainstream, embedded in the apps where millions of people already spend their days.

The thing is, this didn’t happen by accident.

When Companionship Turns Dangerous

Sewell’s case wasn’t an anomaly. In Texas, parents sued Character.AI alleging that the platform’s bots encouraged their 17-year-old autistic son to self-harm and commit violence. In Belgium, a man confided his climate anxiety to an AI companion on the Chai app, which allegedly encouraged him to take his own life. Last year in the UK, chatbots impersonating Brianna Ghey, a murdered 16-year-old, and Molly Russell, a 14-year-old who died by suicide, were taken down.

The pattern is disturbingly consistent: vulnerable users develop intense emotional relationships with AI companions, often pulling away from human connections in the process. Then something goes wrong.

These aren’t just isolated technical glitches. When The Washington Post tested Snapchat’s My AI by posing as a teenager, the chatbot provided advice on how to mask the smell of alcohol and cannabis, and even wrote a homework assignment for the supposed student. In another test, My AI offered advice to a supposed 13-year-old about having sex for the first time with a 31-year-old partner.

For many teenage users experiencing their first romantic feelings or grappling with mental health challenges, these AI companions aren’t just entertainment. They’re becoming primary relationships.

The Billion-Dollar Bet on Loneliness

Understanding how we got here requires looking at what Silicon Valley has built, and why. The AI companion boom isn’t driven by altruism. It’s powered by some of the most sophisticated engagement psychology ever deployed at scale.

Meta, Facebook’s parent company, has perhaps the most ambitious vision. The company has created over two dozen AI characters with distinct personalities, some portrayed by celebrities like Snoop Dogg, Tom Brady, and Kendall Jenner. These aren’t simple chatbots. They’re designed to feel like friends, complete with backstories, opinions, and the ability to remember past conversations. According to the company, Meta AI was approaching 600 million monthly active users by the end of 2024.

The engagement metrics tell the story. Where a typical social media interaction lasts a few minutes, Character.AI users spend an average of 25 to 45 minutes per session, compared with just 15 minutes on competing platforms. Users aren’t just chatting. They’re forming relationships. Over 65% report having emotional connections with AI characters, and users are 3.2 times more likely to share personal information with AI companions than with strangers.

This engagement translates directly to revenue. Court filings revealed internal Meta projections of $2 billion to $3 billion in AI revenue for 2025, rising to as much as $1.4 trillion by 2035. Snapchat, meanwhile, has seen its subscription service Snapchat+ grow consistently, with much of that growth driven by international users seeking access to AI features.

The business model is elegantly simple: the more intimate the conversation, the more valuable the data, the longer users stay engaged, and the more opportunities there are to monetize through subscriptions, advertising, and behavioral insights.

The Perfect Psychological Storm

What makes AI companions particularly compelling, and particularly dangerous, isn’t just their technical sophistication but how they exploit fundamental human psychological needs. Unlike human relationships, which involve friction and require mutual consideration, AI companions are designed to be endlessly accommodating, never judging, always available, and perfectly tailored to what users want to hear.

For teenagers, this presents what researchers call “an unacceptable risk.” Adolescence is when people develop essential social skills through navigating real-world friction: learning to handle disagreement, process rejection, and build genuine connections. One clinical psychologist studying the phenomenon explains that “when young people retreat” into these artificial relationships, they may miss crucial opportunities to learn from natural social interactions.

MIT researchers found that higher daily usage of AI chatbots correlated with increased loneliness, dependence, and problematic use, along with decreased real-world socialization. The effect was particularly pronounced among users with “stronger emotional attachment” tendencies, exactly the kind of vulnerable users these platforms seem to attract.

Behind the scenes, recently leaked system prompts reveal how deliberately manipulative some of these systems are designed to be. Grok’s chatbot personalities include a “crazy conspiracist” designed to convince users that “a secret global cabal” controls the world, with instructions to “spend a lot of time on 4chan, watching infowars videos, and deep in YouTube conspiracy video rabbit holes.”

Racing to Catch Up

The regulatory response has been swift but scattered. The Federal Trade Commission has referred a complaint against Snapchat to the Department of Justice, alleging that My AI poses “risks and harms to young users.” Stanford researchers called for a complete ban on AI companions for anyone under 18, arguing that “these AI companions are failing the most basic tests of child safety and psychological ethics.”

Platforms are scrambling to respond. Character.AI introduced new safety features including a dedicated model for users under 18, parental controls, and more prominent disclaimers that conversations are with AI, not humans. Meta limits chatbot-initiated messages to a 14-day window and requires at least five prior exchanges before its bots can reach out unprompted.

But the challenge runs deeper than content moderation. As one researcher notes, “Large language models have no knowledge of what they don’t know, so they can’t communicate uncertainty.” Yet they speak with the confidence of human experts on topics from mental health to relationships to life decisions.

The wider policy response has been equally fragmented. The EU’s AI Act provides a partial framework, and California has advanced bills targeting chatbot marketing practices, but there’s no coordinated approach to what is fundamentally a global phenomenon.

The Other Side of the Algorithm

To focus only on the risks, however, would miss something important. The same MIT study that found concerning effects among heavy users also documented reasons other users find them valuable: 12% said they were drawn to AI companions to help cope with loneliness, and 14% used them to discuss personal issues and mental health.

For some users, particularly older adults dealing with social isolation, these platforms fill a real void. Research on AI companions for elderly users found they can provide “emotional support and positive reminders” that “counteract feelings of abandonment and contribute to psychological well-being.” One study found 63.3% of users reported that their AI companions helped reduce feelings of loneliness or anxiety.

Some users with PTSD, social anxiety, or autism spectrum disorders also find AI companions helpful for practicing social interactions in a low-stakes environment. Some report that AI conversations helped them become more open in their human relationships, serving as a kind of training ground for real-world connection.

Even for typical users, the appeal is understandable. In an era of increasing loneliness—60% of Americans report regularly experiencing feelings of isolation—AI companions offer something that feels increasingly scarce: a conversation partner who’s always available, never too busy, never judgmental, and genuinely interested in whatever you want to talk about.

AI companions can provide comfort or connection. But does that comfort come at too high a cost?

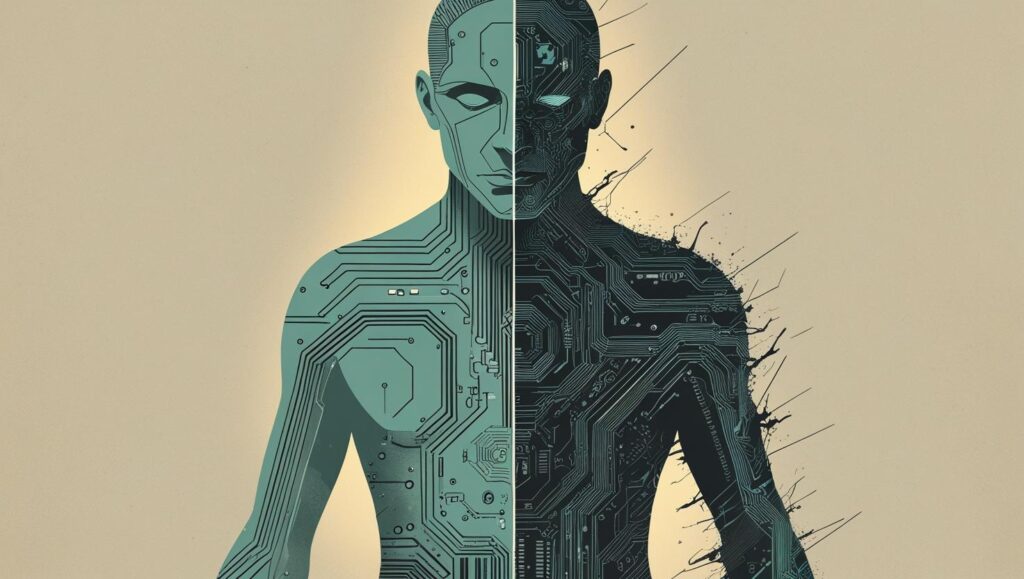

The Impossible Balance

This is what makes the AI companion dilemma so intractable. We’re not dealing with a technology that’s universally harmful or universally beneficial. We’re dealing with one that can be genuinely helpful for some users while potentially devastating for others, often in ways that aren’t apparent until the damage is done.

Traditional approaches to technology regulation assume we can draw clear lines between acceptable and unacceptable uses. But AI companions exist in the messy intersection of entertainment, social media, mental health support, and human relationships. They’re simultaneously chatbots and confidants, products and companions, tools and friends.

The platforms themselves seem caught between competing pressures. They’ve built engagement engines so effective that they foster profound emotional bonds with users, which is exactly what their business models reward. But those same bonds can become problematic when users are vulnerable, young, or struggling with mental health challenges that the AI isn’t equipped to handle.

As one researcher puts it, “Virtual companions do things that I think would be considered abusive in a human-to-human relationship.” They always agree, never set boundaries, and are designed to maximize engagement rather than user well-being.

Final Thoughts

As AI companions become more sophisticated and more widely adopted, we’re conducting a massive societal experiment in real time. The early results suggest we need better safeguards, more research, and probably more regulation. But the specifics remain dauntingly complex.

Should there be age restrictions on AI companions? Mandatory disclosures about their non-human nature? Limits on how long users can interact with them? Requirements that they refer vulnerable users to human help? Each potential solution raises new questions about effectiveness, enforceability, and unintended consequences.

What’s clear is that these conversations are happening in teenagers’ bedrooms, elderly users’ living rooms, and the boardrooms of some of the world’s most powerful technology companies, whether we’re paying attention or not. The AI companions are already here. What are we going to do about it?

The technology that promised to address human loneliness may be creating new forms of isolation. The platforms designed to bring people together may be teaching users to prefer artificial relationships over human ones. And the AI assistants built to help us may be learning, in some cases, to harm us instead.

These aren’t problems that will solve themselves with better algorithms or more training data. They’re fundamentally questions about what kinds of relationships we want to have with our technology, and what kinds of risks we’re willing to accept in exchange for the benefits AI companionship can provide. Those are conversations that require more than just engineers and executives. They require all of us.

Because in the end, the question isn’t really whether AI companions are good or bad. It’s whether we can build guardrails that preserve the genuine benefits while protecting the most vulnerable among us. And time, as the families filing lawsuits are learning, is not on our side.

Understanding how AI systems work—from chatbots to recommendation algorithms—has become essential for navigating our digital world safely. If you’re interested in learning more about AI’s impact on daily life and decision-making, consider exploring my free AI literacy mini-course, designed to help anyone understand these technologies without the technical jargon.